ChatGPT -- DataCamp learning

Notes from DataCamp's Introduction to ChatGPT course

- ChatGPT is a generative AI tool. Generative AI is a subset of machine learning (ML), which is in turn a subset of artificial intelligence (AI).


- It generates new content by using patterns in the information it learned during training; the model that holds these patterns is a large language model (LLM).

- What does it do?
  - Prompt (a question, request, or instruction): the input
  - The prompt is passed to the large language model (LLM)
  - The LLM uses a complex algorithm to determine the patterns and structure and creates the response (hence "generative")
  - The response is returned as the output

- Limitations
  - LLM
    - It learns the frequency and order of words (like building blocks, where each block represents a word)
    - Many types of data are used to train an LLM
    - To create the model, a complex algorithm is used to learn the patterns in the training data
    - The model is fine-tuned through feedback on its responses (I guess by humans? Like the thumbs up/down you can click when a response does or does not make sense!)
  - Potential bias due to the training data used to build the LLM
  - Training data comes from
    - Books
    - Articles
    - Websites
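To make the "frequency and order of words" idea a bit more concrete, here is a toy sketch in Python: a bigram model that counts which word follows which in a tiny made-up corpus, then generates text by repeatedly picking the most frequent next word. This is only an analogy under loud assumptions: the LLM behind ChatGPT is a large neural network trained on vastly more data, not a table of word-pair counts, and the corpus and the helper names (`train_bigrams`, `generate`) are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Toy 'training': count how often each word is followed by each other word."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily extend the prompt with the most frequent follower seen in training."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:  # this word was never followed by anything: stop
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus standing in for books, articles, and websites
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the dog", max_words=4))  # prints "the dog sat on the cat"
```

The generated sentence never appears in the corpus: the model stitches learned word patterns into something new, which also hints at why a fluent answer is not necessarily a correct one.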

- As you may have noticed, ChatGPT keeps track of the conversation context, so if we give it a prompt that is unrelated to the ongoing conversation, the response may not be correct.
- Thus, to use ChatGPT effectively, it is better to talk about only one topic at a time.
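A minimal sketch of why mixing topics matters, assuming a chat app stores each turn as a `{"role": ..., "content": ...}` message and sends the whole history to the model with every new prompt. The role/content layout is similar to what common chat APIs use, but nothing here calls a real API; the helpers `add_message` and `context_seen_by_model` are hypothetical, and real systems also trim old messages to fit a limited context window.

```python
# Assumption: one message per turn, and the full history is the model's context.
Message = dict[str, str]


def add_message(history: list[Message], role: str, content: str) -> list[Message]:
    """Return a new history with one message appended (the input list is untouched)."""
    return history + [{"role": role, "content": content}]


def context_seen_by_model(history: list[Message]) -> str:
    """Flatten the history into the text the model would condition its next reply on."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in history)


chat: list[Message] = []
chat = add_message(chat, "user", "Explain what a large language model is.")
chat = add_message(chat, "assistant", "A model trained on large amounts of text...")
# Switching topics in the same chat: the LLM discussion is still part of the context
chat = add_message(chat, "user", "Now write a recipe for banana bread.")
print(context_seen_by_model(chat))
```

Starting a fresh chat (an empty `chat` list in this sketch) is the simple way to keep an unrelated topic out of the context.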

- Hallucinations occur when the LLM confidently gives an answer that goes beyond its training data, producing plausible-sounding but incorrect or made-up information.

- Legal/ethical considerations: if you claim you created something with your own intelligence but actually used ChatGPT to generate it, who owns the product? The people who provided the data used to train GPT, the person who used ChatGPT, or OpenAI? It's kind of a gray area.

- Garbage in, garbage out: see older post: https://t-lerksuthirat.blogspot.com/2020/06/machine-learning-for-everyone.html
- Thus, we need to write good prompts that result in better (more accurate) responses.

- Prompt engineering: the process of writing prompts to maximize the quality and relevance of the response
- Guidelines
  - Be clear and specific
  - Include the necessary information
  - Specify how long you would like the response to be: one page, one paragraph, or one sentence
  - Be concise
    - Remove information that does not provide useful context
  - Use correct grammar and spelling in the prompt, since ChatGPT relies on grammar when interpreting the task
  - Provide a sample to steer ChatGPT into generating the response in a specific format
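The guidelines above can be turned into a reusable template. Below is one hypothetical way to do it in Python: `build_prompt` and its field labels ("Task:", "Context:", "Length:") are assumptions for illustration, not a format ChatGPT requires; the function only builds a prompt string that you could paste into the chat.

```python
from typing import Optional


def build_prompt(task: str, context: str, length: str, example: Optional[str] = None) -> str:
    """Assemble a prompt following the guidelines: a clear task, the needed context,
    an explicit response length, and an optional sample of the desired format."""
    parts = [
        f"Task: {task.strip()}",
        f"Context: {context.strip()}",
        f"Length: respond in {length.strip()}.",
    ]
    if example:
        # A sample output nudges the model toward a specific format
        parts.append(f"Use this format:\n{example.strip()}")
    return "\n".join(parts)


prompt = build_prompt(
    task="Summarize the main limitations of ChatGPT.",
    context="The audience is students new to AI.",
    length="one paragraph",
    example="- Limitation: short explanation",
)
print(prompt)
```

Keeping each guideline as a separate labeled line makes it easy to check that nothing (task, context, length, format) was forgotten, while the prompt stays short.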

-

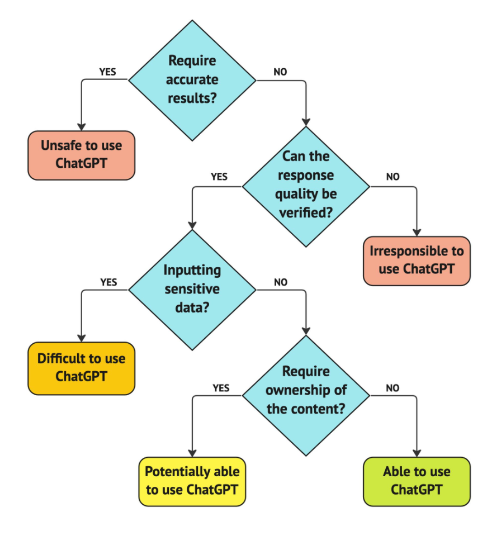

Use or not use ChatGPT – rule of thumb – use it when

you are able to verify the data generated from the ChatGPT.

Next steps: what drives the improvement

- LLMs
  - Learn from a huge text dataset
    - The dataset will keep growing
    - More complex things will be learned, for example, sarcasm and idioms
  - Algorithms detect patterns in text
  - The model is fine-tuned by rating responses
    - Users help fine-tune the responses, which makes the model more human-like
  - Bias is the most challenging part, so high-quality and balanced training data is needed

- Law and ethics
  - Misuse of ChatGPT
    - Creating malicious content
    - Misrepresenting AI-generated content as human-made (people being fooled by AI)
- ChatGPT may become more specialized in the future.
